Key Indicators: Monitoring and Assessing Forecasting Performance

Companies use a variety of methods and metrics to track and assess the health of their forecasting capabilities. Two of the most widely used and most informative metrics are Forecast Accuracy (FA) and Forecast Bias. Using these two metrics, a company can identify trends in forecast performance, conduct root cause analysis to determine what is driving that performance, and implement action plans to improve it.

What is Forecast Accuracy (FA)?

Forecast Accuracy measures the degree to which an organization is able to successfully predict, at a specific point in time (often referred to as the “lag”), future demand for its product portfolio at an individual SKU level. In other words, Forecast Accuracy measures how well we can predict which SKUs will be sold during a specific timeframe by comparing the forecast captured at a specific point in time (the lag) to the actual sales. (Note: actual sales may be defined as either actual customer order quantity or actual shipped quantity.) Forecast Accuracy tells us how well we understand when and in what product mix customer demand will occur. While Forecast Accuracy is a backward-looking metric, ongoing monitoring of FA results identifies trends that may be predictive of future risks and opportunities for both the commercial and operational sides of a business.

How is Forecast Accuracy Calculated?

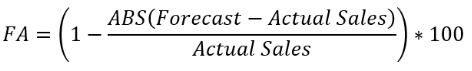

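The calculation for Forecast Accuracy, as applied in the example below, is:

Forecast Accuracy = (1 - (ABS(Forecast - Actual Sales) / Actual Sales)) * 100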

Example

| SKU | Forecast (LagX) | Actual Sales | Absolute Variance | Forecast Accuracy |
|---|---|---|---|---|
| A | 100 | 75 | 25 | (1-(ABS(100-75))/75) * 100 = 67% |
| B | 450 | 1,000 | 550 | (1-(ABS(450-1,000))/1,000) * 100 = 45% |
| C | 230 | 210 | 20 | (1-(ABS(230-210))/210) * 100 = 90% |
| D | 1,000 | 800 | 200 | (1-(ABS(1,000-800))/800) * 100 = 75% |
| E | 350 | 195 | 155 | (1-(ABS(350-195))/195) * 100 = 21% |

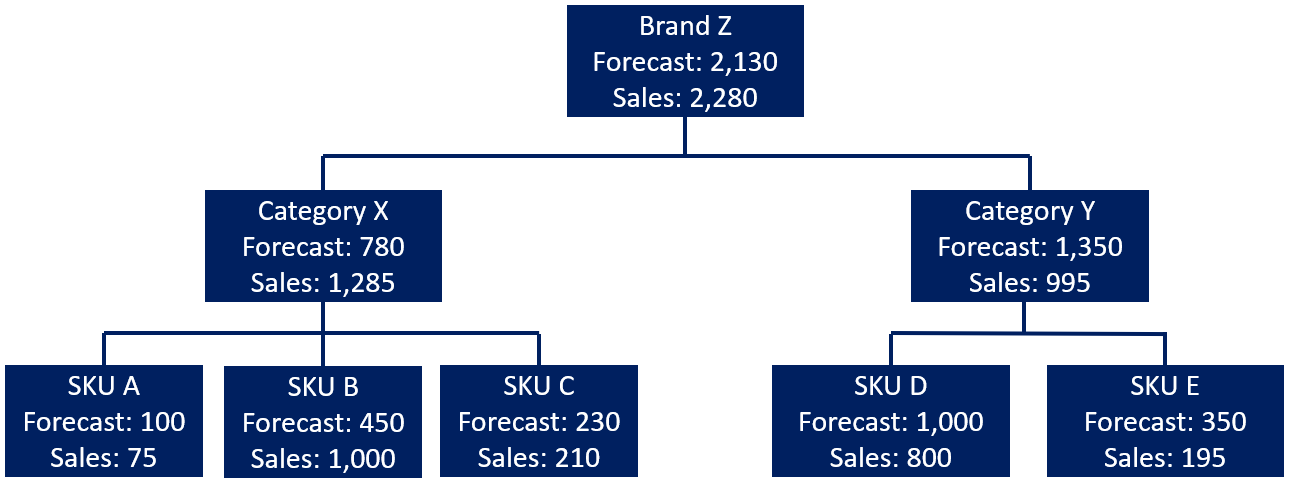

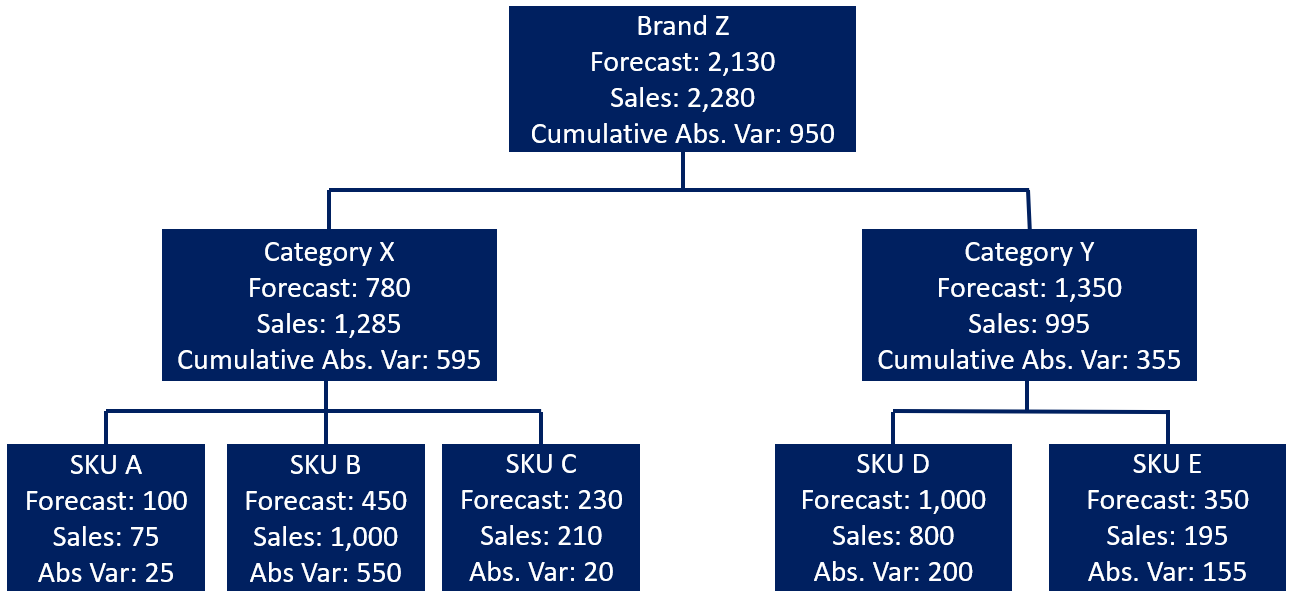
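To make the arithmetic in the example reproducible, here is a minimal Python sketch of the SKU-level calculation. The function name `forecast_accuracy` and the `skus` dictionary are illustrative assumptions rather than part of any particular planning tool; the figures are the ones used in the example table.

```python
def forecast_accuracy(forecast: float, actual: float) -> float:
    """Return FA as a percentage: (1 - |forecast - actual| / actual) * 100."""
    if actual == 0:
        # The formula divides by actual sales, so zero sales needs its own rule.
        raise ValueError("actual sales must be non-zero")
    return (1 - abs(forecast - actual) / actual) * 100


# SKU -> (forecast at LagX, actual sales), copied from the example table.
skus = {
    "A": (100, 75),
    "B": (450, 1000),
    "C": (230, 210),
    "D": (1000, 800),
    "E": (350, 195),
}

# Round to whole percentages, as the table does.
sku_fa = {sku: round(forecast_accuracy(f, a)) for sku, (f, a) in skus.items()}
print(sku_fa)
```

Rounded to whole percentages, this gives 67, 45, 90, 75, and 21 for SKUs A through E, matching the table. Note that the formula as written turns negative when the absolute variance exceeds actual sales; whether to floor such results at 0% is a policy choice this article does not specify.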

When calculating FA at higher levels of the product structure, the variances at SKU level are cumulative, meaning that an over-forecast on one SKU is not offset by an under-forecast on another SKU.

For example, let’s assume that SKUs A, B, and C belong to Category X. SKUs D and E belong to Category Y. Both Category X and Category Y belong to Brand Z.

If we calculate the Category FA and Brand FA simply from the total forecast and total actual sales at each level:

| Level | Forecast (LagX) | Actual Sales | Absolute Variance | Forecast Accuracy |
|---|---|---|---|---|
| Category X | 780 | 1,285 | 505 | (1-(505/1,285)) * 100 = 61% |
| Category Y | 1,350 | 995 | 355 | (1-(355/995)) * 100 = 64% |
| Brand Z | 2,130 | 2,280 | 150 | (1-(150/2,280)) * 100 = 93% |

…we are overstating the forecast accuracy and overlooking any product mix issues we may have in our forecasting performance (which are clearly evident when we look at the SKU-level results above). We can address this by summing the SKU-level absolute variances at each level of the hierarchy instead of netting them.

| Level | Forecast (LagX) | Actual Sales | Absolute Variance (sum of SKU-level variances) | Forecast Accuracy |
|---|---|---|---|---|
| Category X | 780 | 1,285 | 595 | (1-(595/1,285)) * 100 = 54% |
| Category Y | 1,350 | 995 | 355 | (1-(355/995)) * 100 = 64% |
| Brand Z | 2,130 | 2,280 | 950 | (1-(950/2,280)) * 100 = 58% |
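The difference between the two roll-up approaches can be sketched in a few lines of Python. This is an illustration under stated assumptions: the `ROWS` layout and the helper names `accuracy` and `rollup` are hypothetical, and the data is the five-SKU example from this article.

```python
from collections import defaultdict

# (SKU, category, forecast at LagX, actual sales), taken from the example.
ROWS = [
    ("A", "Category X", 100, 75),
    ("B", "Category X", 450, 1000),
    ("C", "Category X", 230, 210),
    ("D", "Category Y", 1000, 800),
    ("E", "Category Y", 350, 195),
]


def accuracy(abs_variance, actual):
    """FA as a percentage for a given absolute variance and actual sales."""
    return (1 - abs_variance / actual) * 100


def rollup(rows, group_of):
    """Return {group: (naive FA %, aggregated FA %)}, rounded to whole percent."""
    # Per group: total forecast, total actual, sum of SKU-level absolute variances.
    totals = defaultdict(lambda: [0, 0, 0])
    for _sku, category, forecast, actual in rows:
        t = totals[group_of(category)]
        t[0] += forecast
        t[1] += actual
        t[2] += abs(forecast - actual)
    return {
        group: (
            round(accuracy(abs(f - a), a)),  # nets over- and under-forecasts
            round(accuracy(abs_var, a)),     # keeps every SKU-level miss
        )
        for group, (f, a, abs_var) in totals.items()
    }


by_category = rollup(ROWS, group_of=lambda category: category)
by_brand = rollup(ROWS, group_of=lambda category: "Brand Z")
print(by_category)
print(by_brand)
```

For Brand Z the first figure in each pair is 93% and the second is 58%, which reproduces the gap between the two tables. Category Y shows no gap because both of its SKUs were over-forecast, so there is nothing to net out.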

What is Forecast Bias?

Forecast Bias measures an organization’s tendency to consistently over- or under-forecast against actual sales, and the degree to which that bias exists. Unlike Forecast Accuracy, which tells us how well we understand when and in what product mix customer demand will occur, Forecast Bias makes us aware of our tendency to be either overly optimistic or overly pessimistic in our forecasting. Like Forecast Accuracy, Forecast Bias is a backward-looking metric. Ongoing monitoring of Bias results identifies trends on which we can conduct root cause analysis and then implement action plans to reduce bias in our forecasts.

How is Forecast Bias Calculated?

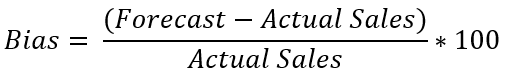

The calculation for Forecast Bias is:

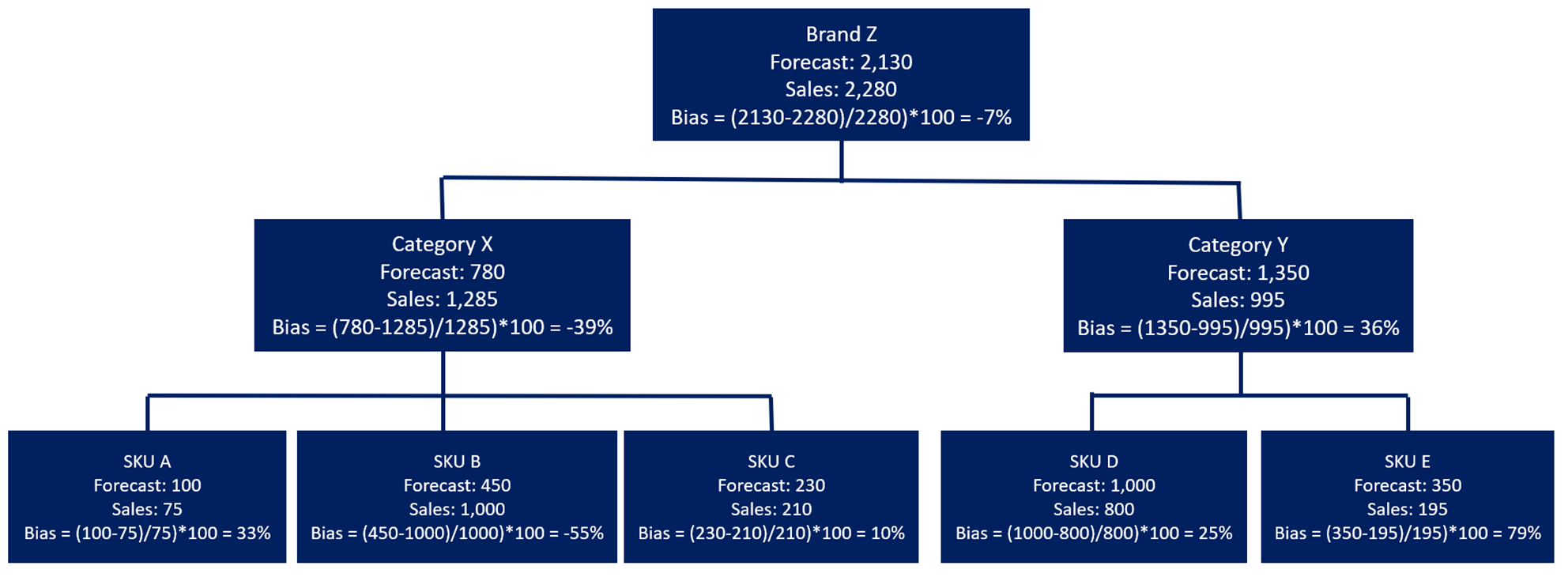

Using the same example from above, Forecast Bias is calculated as…

Unlike the Forecast Accuracy calculation, Forecast Bias does not use the absolute variance between forecast and actual sales. As a result, an under-forecast on one SKU is offset by an over-forecast on another SKU as we move up the hierarchy.
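The offsetting behaviour can be illustrated with a short Python sketch. The formula itself is given only in the image above, so the code assumes a common formulation: (total forecast - total actual sales) / total actual sales * 100. The sign convention (positive means forecast higher than actual sales) follows the root-cause table later in this article; using actual sales as the denominator is an assumption made for consistency with the Forecast Accuracy calculation, and the names `forecast_bias` and `EXAMPLE` are hypothetical.

```python
def forecast_bias(pairs):
    """Signed bias %, ASSUMING (sum forecast - sum actual) / sum actual * 100.

    Positive means over-forecasting, negative means under-forecasting.
    """
    total_forecast = sum(forecast for forecast, _ in pairs)
    total_actual = sum(actual for _, actual in pairs)
    if total_actual == 0:
        raise ValueError("total actual sales must be non-zero")
    return (total_forecast - total_actual) / total_actual * 100


# (forecast at LagX, actual sales) per SKU, grouped as in the FA example.
EXAMPLE = {
    "Category X": [(100, 75), (450, 1000), (230, 210)],  # SKUs A, B, C
    "Category Y": [(1000, 800), (350, 195)],             # SKUs D, E
}

category_bias = {name: round(forecast_bias(p)) for name, p in EXAMPLE.items()}
# Brand Z is every SKU from both categories; signed variances net out here.
brand_bias = round(forecast_bias([pair for p in EXAMPLE.values() for pair in p]))
print(category_bias, brand_bias)
```

Under this assumed formula, Category X comes out at about -39% and Category Y at about +36%, while Brand Z nets to roughly -7%. The large and opposite category-level biases largely cancel at brand level, which is the offsetting effect described above.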

Why Is It Important to Measure Forecast Accuracy and Forecast Bias?

Poor forecasting can be problematic not only within Supply Chain / Operations but also enterprise-wide.

| Metric Result | Potential Root Causes | Potential Impacts to an Organization |
|---|---|---|
| Low Forecast Accuracy (forecast lower than actual sales) | Wrong SKU mix; Wrong timing of customer demand; Missing promotional activity; Unanticipated changes in marketplace / consumer behaviour changes; Bias | Service level failure; Customer fines; Loss of sales from out of stocks (OOS); Cost of production (expedited procurement, transportation, and manufacturing scheduling changes to replenish OOS, manufacturing overtime) |
| Low Forecast Accuracy (forecast higher than actual sales) | Wrong SKU mix; Wrong timing of customer demand; Bias; Changes in promotional activity; Competitor activity in marketplace; Unanticipated consumer behaviour changes | Inventory costs (cost to carry, obsolescence); Cost of production (manufacturing scheduling changes to delay replenishment, cost to carry raw materials, redeployment of scheduled human resources) |
| Variable Forecast Accuracy (significant swings in FA results) | Wrong SKU mix; Wrong timing of customer demand; Missing promotional activity; Changes in promotional activity; Unanticipated changes in marketplace / consumer behaviour changes; Bias | Service level failure; Customer fines; Loss of sales from out of stocks; Cost of production (expedited procurement, transportation, and manufacturing scheduling changes to replenish OOS, manufacturing overtime); Inventory costs (cost to carry, including carrying costs of higher safety stock requirements, and obsolescence) |
| Positive Bias (consistent forecast higher than actual sales) | Over-optimism on new product launches; Over-optimism on promotional activity; Inflating forecast to mitigate potential service level failures; Consistent and continuous service level failures (if using actual shipments in calculating bias) | Inventory costs (cost to carry, obsolescence); Cost of production (manufacturing scheduling changes to delay replenishment, cost to carry raw materials, redeployment of scheduled human resources) |
| Negative Bias (consistent forecast lower than actual sales) | Changes in marketplace, e.g. customer expansion (new store openings), competitor exiting category, changing consumer behaviours | Service level failure; Customer fines; Loss of sales from out of stocks; Cost of production (expedited procurement, transportation, and manufacturing scheduling changes to replenish OOS, manufacturing overtime) |

By creating a Forecasting Performance Dashboard, an organization can monitor and assess Forecast Accuracy and Forecast Bias to identify trends in forecast performance. Demand Planning can conduct root cause analysis to determine drivers of forecasting performance and work with their Sales, Marketing, and Operations partners to proactively implement action plans to mitigate risks, take advantage of opportunities, and improve forecasting performance.